Some recent developments in Java made me reflect on its history and on how I have followed the evolution of the language. The move from Java EE to EE4J and beyond, and the opening up of client-side Java, are clear signs that Java is a community platform rather than a company-controlled language.

THE EARLY DAYS

When I started my PhD in Applied Physics at Delft University of Technology more than 20 years ago, one of the main challenges was combining computation with the visualization of large amounts of data.

My work was a collaboration between the faculties of Aerospace Engineering and Applied Sciences, and those groups are used to dealing with huge amounts of data. Most of the work was done in Fortran or C/C++. I still love these languages, and I admire the amazing work people have done with them.

But around that time (1996), a new language introduced me to object-oriented programming and to the advantages of encapsulation, reusability, multi-threading, security, and more. That language was Java.

At that time, two obstacles prevented me from using Java for my PhD thesis: it didn't run on Linux (I never managed to move fluently from the Apple ][ to the Macintosh without losing productivity, so I had switched to Linux), and it was slow.

I joined the Blackdown team, a group of people whose goal was to port Java to Linux, and together we solved that first problem. I still remember the weird looks and comments we got in those days: “Linux? Seriously? Who would ever want to run Java on Linux?” Well, we wanted to, so we did.

I also remember that in those early days we occasionally received large drops of new code from Sun Microsystems. When the Swing code was first added, I was very hopeful: I saw fun animations that might help me with my PhD.

I compiled the sources on my SPARCstation at work (I think it was a SPARCstation 10). It took 23 hours and 45 minutes to complete. Pretty long, but that's OK: the end developer doesn't have to compile Swing from source.

However, Java's performance in the late nineties and the lack of a truly great UI framework forced me back to Fortran and C, and I used the excellent Seismic Unix package (https://en.wikipedia.org/wiki/Seismic_Unix) for my visualizations.

EARLY 2K

Gradually, the HotSpot virtual machine and its just-in-time compiler improved steadily, and for a number of reasons the Java programming language made a lot of sense for enterprise backend development. I have followed enterprise Java development through iPlanet, the Sun ONE application server, the release of the Spring Framework, and the GlassFish application server. Writing enterprise applications in Java became the norm, for very good reasons: Java is available on almost all enterprise operating systems, and the platform and its tools drastically increase developer productivity.

While doing lots of work on the enterprise side, I also spent time on embedded and mobile development. I wrote software for a telematics system, and we expanded it to mobile devices in general. Well, “in general” is not completely accurate. At that time, the mobile landscape was pretty chaotic. The software that ran on the embedded telematics device also ran on the Compaq iPAQ, the Sharp Zaurus (bought at JavaOne 2002), and a bunch of other devices.

We faced two very big hurdles:

1. There was no standard API for Java development on these devices in general, or for device management in particular.

2. There was no way to automatically provision our apps to end-user devices. Telcos and device manufacturers kept control of the local apps.

The business model of the telematics company I worked for was mainly server-side: let software companies create telematics software (e.g. infotainment), and deliver this software to the on-board telematics devices in a secure, controlled, and managed way. That requires lots of business tools such as billing, logging, and administration.

Although the business model was clearly server-focused, everybody realized that to get data to the server, you need clients that generate this data and send it to your server.

I have advocated a Mobile First, Cloud First strategy for a long time. If you want incoming traffic to your cloud, you had better start where the data originates.

TODAY

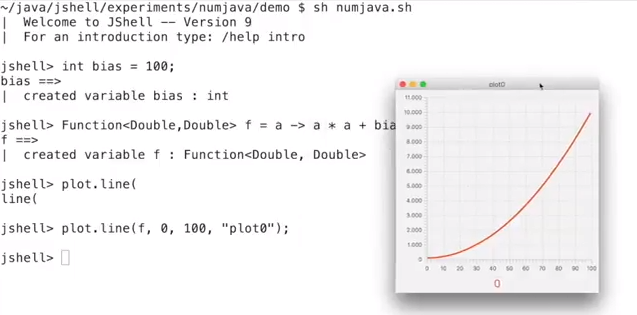

The mobile and embedded worlds have changed. From a technical point of view, Java on the client has never been in better shape.

We have Java 9 running on desktop, laptop, mobile, and embedded devices.

We have JavaFX, a modern, cross-platform UI framework that works on desktop, laptop, mobile, and embedded devices and leverages hardware-accelerated rendering.

Java developers can now write not only enterprise applications, but also the client apps that generate the data feeding those enterprise applications.

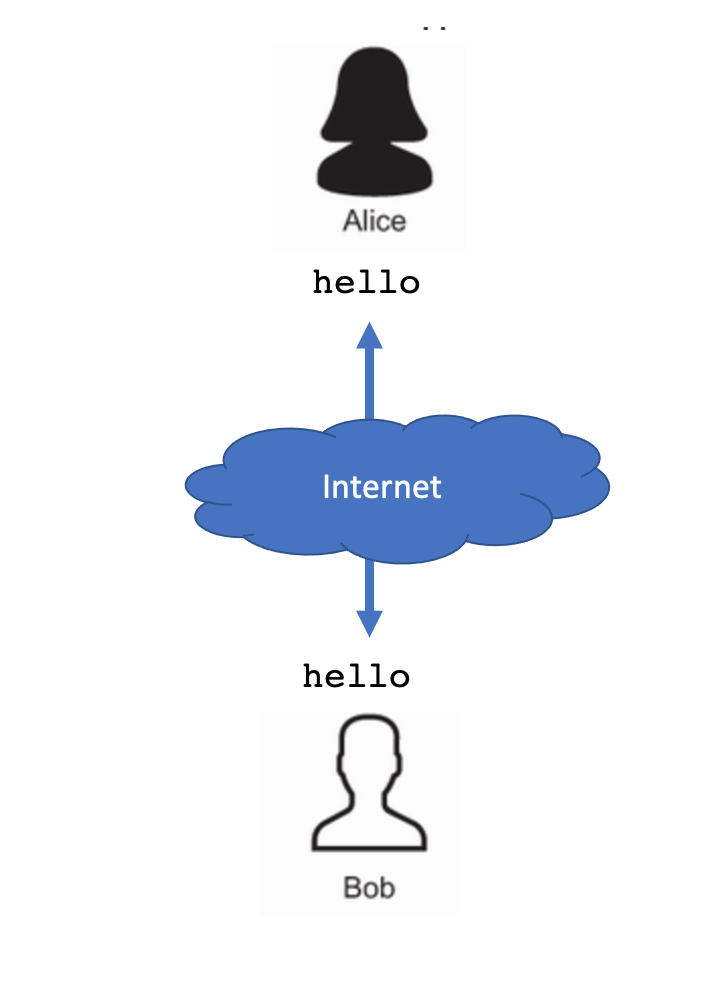

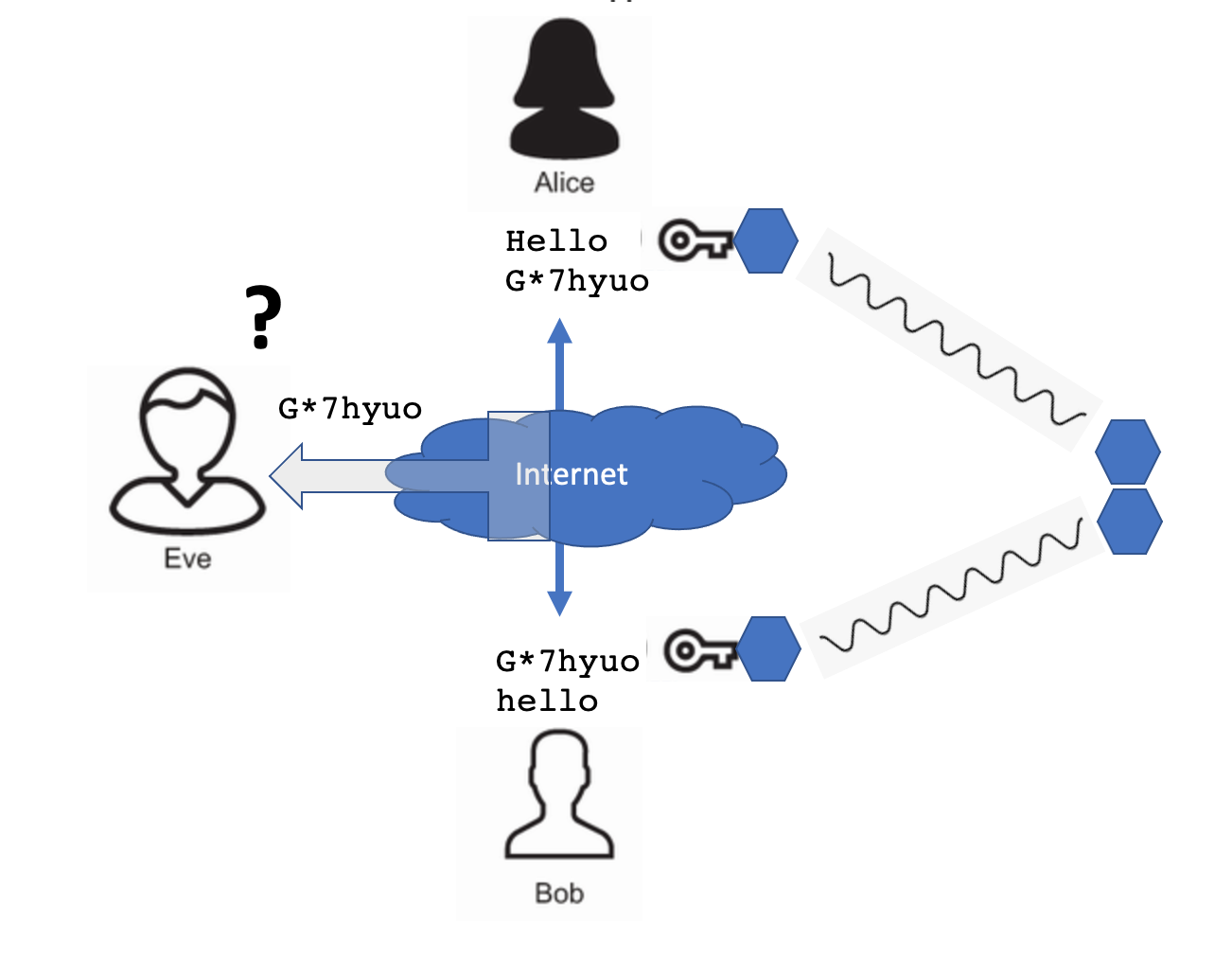

The possibilities of Java on the client are immense. One of Java's key features is that security has been built in from day one. This is sorely needed in today's client development. Cyber security is one of the things that worries me most. Client developers have a huge responsibility in this area, and Java helps them secure the application and its context.

Java is also very powerful: using JNI (the Java Native Interface), you can leverage native libraries that unlock features such as deep learning. You can write apps that use deep learning algorithms with the same code on mobile and desktop clients. Doing some processing locally on the client can eliminate the need to send raw, privacy-sensitive data to servers.

As Java grew, it became obvious that no single company can do all the development in every corner of Java. When Oracle acquired Sun, it quickly became clear that Oracle was not interested in all aspects of Java. Oracle is a cloud-focused company, so its investments in Java focus on Java for the cloud.

But many other companies benefit from Java in other areas, or want to link client development in Java to enterprise development in Java on their clouds.

The Java client ecosystem is very much alive and active. Every month, more than 30,000 developers download Scene Builder from Gluon. Scene Builder is a visual tool that allows developers to create JavaFX user interfaces easily and intuitively.

There are a number of excellent frameworks and libraries created by enthusiastic developers. Every month, 2,500 developers download the Gluon Mobile plugin for their IDE, which lets them create cross-platform apps for iOS and Android, written in 100% Java. And this number is increasing month by month.

While Oracle has invested a lot in client-side Java over the years, it is clear that the wider community is now taking over.

As part of this movement, I became the project lead for the OpenJDK Mobile project, whose goal is to make sure the OpenJDK classes and VM code work on mobile platforms (iOS and Android).

To encourage interested third parties to get involved in the development of JavaFX, we created a mirror on GitHub at https://github.com/javafxports/openjdk-jfx.

This mirror automatically pulls changes from the official OpenJFX repository, so its master branch stays up to date with the official repository.

Developers can now fork the very latest JavaFX code, run their own experiments, and hopefully contribute something back. Companies can evaluate new features in a more flexible way.

In summary, Java is amazing. It is both a platform and a language, and it works on enterprise cloud systems, desktops, mobile devices, and embedded systems. It doesn't fix every problem in IT, but one of its biggest assets is that it is available everywhere and lets you create robust, secure apps, leveraging tons of third-party tools (IDEs) and libraries. It is an ecosystem that is much bigger than a single company.

And if you want to be part of Java's success, you can just do it.